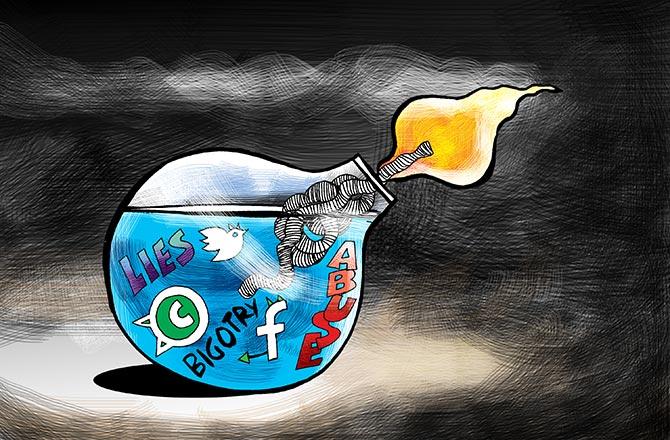

A Molotov cocktail of lies, abuse and bigotry is blowing up social media, discover Arundhuti Dasgupta, Neha Alawadhi and Romita Majumdar.

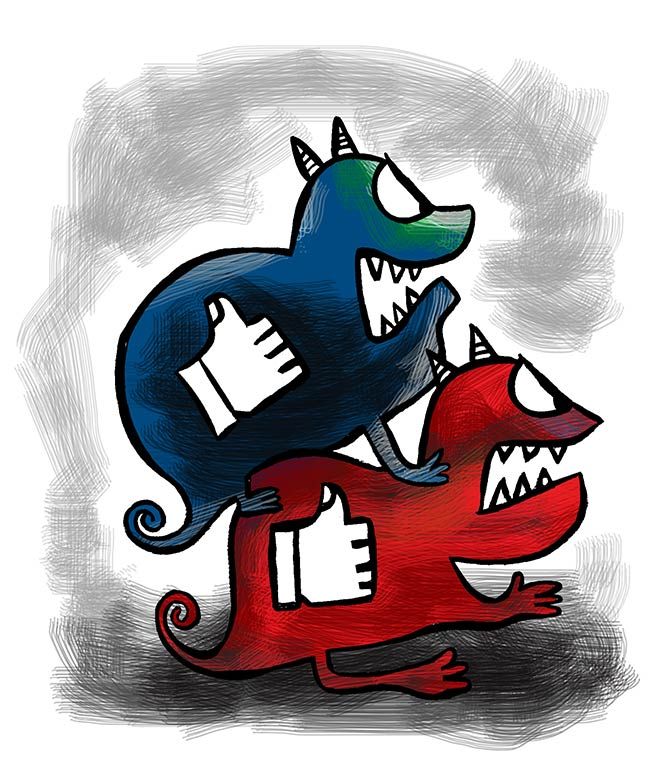

Illustrations: Uttam Ghosh/Rediff.com

Her nightmare never lets up. It makes her shrink in public and weep in private. Her life turned upside down after an abusive boyfriend posted nude photographs of her online.

By the time social media moderators took them down, the photographs had been viewed several hundred times.

Removing them provided no relief either, because the Internet is relentless in its distribution of information -- like the demon that rises anew from each drop of his blood, the bytes and pixels keep multiplying.

"She is traumatised, doesn't leave her home without her face covered," says Prashant Mali, a Mumbai-based cybersecurity lawyer.

This was not the first violation committed by the man mentioned earlier. He had done the same to another woman, who woke up one morning to find nude pictures of herself all over her family groups.

This girl is brazening it out, though, facing down trolls with dogged determination.

Not every instance of abuse is as intense or as horrific, nor is every victim either a bundle of nerves or steel. Trolling scars are often invisible.

Sharmila Banwat, a Mumbai-based clinical psychologist, says, "The psychological effects of experiencing online trolling (OT) can be considered similar to the psychological effects of offline harassment or bullying. OT is an addiction (for abusers) and needs to be treated on the same lines."

Online abusers wield hate with pride. They use it to intimidate and bully as much as they do to gather their tribe across geographies. The communities of hate feed off each other and multiply with every like, share or retweet.

Hate is recyclable too. Abusers rake up old images and posts to fuel fresh conflict or hurl new obscenities at their targets.

In the ongoing slugfest at Jawaharlal Nehru University, for instance, fact-checking Web site Boomlive found that Raman Malik, spokesperson for the Bharatiya Janata Party in Haryana, had posted an image of a young woman with a bottle of alcohol next to another holding up a protest placard.

The juxtaposition implied that the two were the same, hinting that if she spent less on a profligate lifestyle the woman could pay her university fees. Boomlive found the two images were not of the same person.

N S Nappinai, Supreme Court advocate and cyber law expert, sees a fundamental disconnect in the way abuse and hate are treated.

"Social media platforms must note that protection against victimisation through their platforms is a duty and not a favour to the user," she says.

While conducting research for her book Technology Laws Decoded in 2015, Nappinai came across videos on social media sites depicting unspeakable crimes against women.

By the time YouTube took them down, the videos had been seen 650,000 times. "The road towards victim protection is still long and requires socially responsible processes within such platforms and more effective enforcement mechanisms to expedite takedowns and remedial action," she says.

Social media networks employ artificial intelligence and a band of human moderators to weed out the hateful and the obscene.

Some moderators are employed in-house, but most are part of an outsourced team at a consulting organisation, which could be based in the US or at call centres, say, in India or the Philippines.

Sarah T Roberts, professor at the department of information studies at the University of California, Los Angeles, who has just published a book, Behind the Screen: Content Moderation in the Shadows of Social Media, writes that moderators work under tremendous pressure.

"They act quickly, often screening thousands of images, videos, or text postings a day. And, unlike the virtual community moderators of an earlier internet and of some prominent sites today, they typically have no special or visible status to speak of within the Internet platform they moderate. Instead, a key to their activity is often to remain as discreet and undetectable as possible."

According to a former employee at a social network, moderators follow a punishing schedule. Their breaks are timed and there are tight turnaround schedules.

"There is a turnaround time that moderators are expected to follow -- 80 seconds for a job. This becomes a deliverable and their remuneration is based on this," she says. (Facebook and Twitter have in their official blog posts denied the existence of turnaround times.)

Hate doesn't just trash the lives of users; it also affects those whose job it is to set up protective guardrails for users.

Content reviewers, moderators, process executives -- these are some of the names they go by -- contend with an abhorrent and Sisyphean chore. It is their lot to deal with an inexhaustible cache of insults and abuse.

The networks are concerned, though. They offer detailed explanations on moderation policies and regularly report on the takedowns and action taken against abusers.

A Facebook spokesperson says, "To ensure that everyone's voice is valued, we take great care to craft policies that are inclusive of different views and beliefs, in particular those of people and communities that might otherwise be overlooked or marginalised."

In India, say industry sources, greater attention is now being paid to casteist slurs and misogynistic statements masquerading as tradition.

In July 2019, Twitter updated its rules to proscribe language that dehumanises others on the basis of religion. It sought public comments on 'a new rule to address synthetic and manipulated media'.

Twitter describes this media as 'any photo, audio, or video that has been significantly altered or fabricated in a way that intends to mislead people or changes its original meaning'. These are sometimes referred to as 'deepfakes' or 'shallowfakes'.

Ellen Silver, vice president of operations at Facebook, recently wrote a blog post on security processes at her organisation. She said a team of 30,000 worked on 'safety and security at Facebook. About half of this team are content reviewers -- a mix of full-time employees, contractors and companies we partner with'.

The constant exposure to violence leads to desensitisation and a host of mental health issues for people on the job, says a former employee of Facebook.

Internationally, there has been sustained reporting of the abuse that abuse-trackers undergo.

An investigative report published by media network The Verge revealed how employees at consulting company Cognizant, tasked with moderation for Facebook, said their workplace 'was perpetually teetering on the brink of collapse'.

Workers suffered from post-traumatic stress disorder, the report said. Facebook denied the allegations, but Cognizant announced it was exiting the content moderation business and firing 7,000 workers.

Facebook's Silver had said in her blog post, 'This job is not for everyone -- so to set people up for success, it's important that we hire people who will be able to handle the inevitable challenges that the role presents.'

In India, though, where the job is regularly advertised on search engine portals, you don't hear anyone complain, says the former Facebook employee.

On the contrary, according to Indeed, a recruitment firm, there has been a surge in jobs for content managers and content editors.

From January 2018 to January 2019, job postings for 'content manager' and 'content editor' roles saw a 72 per cent increase.

Job postings for these roles have gone up by 27 per cent between January 2016 and January 2019. Job searches for the same have seen an increase of 58 per cent during the same period.

Sashi Kumar, managing director, Indeed India, observes: "Content managers or content editors become custodians of an organisation's image."

Mark Childs, senior vice president (technology vertical), Genpact, said there is growing interest in this role among young job seekers.

Not all moderation jobs are about washing the blood off the Internet. Some moderators are part of teams that manage brand reputations where the abuse is of a different nature, but the process involved in weeding it out follows universal guidelines.

J R K Rao, managing director of digital marketing agency Rage Communications, explains. Companies set up listening posts -- trusted agents or bots -- that flag objectionable content.

The selected post or tweet is vetted by a team of moderators, who run it through a question tree (usually a template that is chopped and changed according to its country of use) and then red-flag it to the network till it is removed or retained.

In social media companies, says a former industry employee, moderators do not see the entire piece of content. One handles captions, while another checks the image and yet another watches the video or reads the post.

This is meant to protect the anonymity of those posting the content and ensure the speedy resolution of issues. The problem, however, is that these systems do not engage with the user and are not set up to react to user complaints.

In the end, they only serve the networks. As Roberts writes, 'The practice (content moderation)... is typically hidden and imperceptible.'

But hate singes all that come in its way, pushing the networks and the people who use them to the edge of darkness.

The story of Arundathi B, a doctoral student at Hyderabad University, is a case in point. Arundathi participated in a 'Kiss of Love' protest organised at Hyderabad University a few years ago.

Soon after, morphed pictures and lewd messages flooded her pages. "Since I had kissed in public, the virtual molesters thought I was 'readily available'," she says.

But when she began to name and shame the perpetrators, they began apologising profusely. Not many can do that, though, and Banwat says many do not recover from online abuse.

"Victims experience sleep disturbance, emotional distress, sub-optimal functioning, shame, embarrassment and in worst cases even consider suicide," she says.

Many have sworn off social media as a result of the abuse. Actor Manoj Bajpayee said recently that he keeps his online presence to a minimum.

Author Manu S Pillai says that social media brings out the worst in people. "These people have nothing to lose; for them it's a 280 character tweet."

Besides, social media companies chase profits, he says; the more scandal and controversy there is, the more people join the platform.

Pillai says the recent exodus of Twitter users to Mastodon was driven by the callous manner in which platforms treat trolling.

Mastodon is an "alternative to Twitter and a lot of open source technologists were promoting it for a while," says Srinivas Kodali, a volunteer with the Free Software Movement of India.

It attracted attention after Twitter suspended Supreme Court lawyer Sanjay Hegde's account when he posted the image of a German dock worker, August Landmesser, refusing the Nazi salute at a rally. Hegde has served a legal notice on Twitter.

Other microblogging and messaging platforms have also come to the fore, such as Gutrgoo and Mooshak and local language-friendly ShareChat and Helo.

But none is a real alternative, as one of the Internet's oldest users says, because they are simply not as big as Twitter, Facebook or TikTok.

Once their numbers grow to billion-plus members, there will likely be new monsters to confront.

Sundar Sethuraman contributed to this report.

© 2025
